2023. 11. 21. 17:21ㆍML&DL/CV

[Indoor Image Object Detection]

Detecting 10 classes of indoor objects

0: door

1: openedDoor

2: cabinetDoor

3: refrigeratorDoor

4: window

5: chair

6: table

7: cabinet

8: sofa/couch

9: pole

Dataset

https://www.kaggle.com/datasets/thepbordin/indoor-object-detection

Indoor Objects Detection: indoor objects dataset in YOLOv5 format

- Dataset structure

INDOOR
├─train
│  ├─images
│  └─labels
├─valid
│  ├─images
│  └─labels
├─test
│  ├─images
│  └─labels
└─indoor.yaml
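
The layout above pairs every file in `images` with a same-named `.txt` file in `labels`. A minimal sketch for checking that pairing on one split is shown here; the helper name `check_split` and the set of image extensions are assumptions for illustration, not part of the dataset or of Ultralytics.

```python
from pathlib import Path

# Assumed image extensions; adjust to whatever the dataset actually contains.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}

def check_split(root, split):
    """Return (n_images, n_labels, images_without_label) for one split."""
    image_dir = Path(root) / split / "images"
    label_dir = Path(root) / split / "labels"
    images = sorted(p for p in image_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)
    label_stems = {p.stem for p in label_dir.glob("*.txt")}
    # An image counts as unlabeled when no .txt file shares its stem.
    missing = [p.name for p in images if p.stem not in label_stems]
    return len(images), len(label_stems), missing

# Example call (path taken from this post):
# for split in ("train", "valid", "test"):
#     print(split, check_split("/content/drive/MyDrive/INDOOR", split))
```

Running it once per split before training is a cheap way to catch a broken upload or a mismatched folder name.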

Model

* YOLOv8n

- The model is loaded with Ultralytics YOLO

https://github.com/ultralytics/yolov5


💻 Hands-on

* Install the package needed to use YOLOv8

!pip install ultralytics

* Checking the data

- In a Colab environment, display() has to be used to show images

from glob import glob
from PIL import Image, ImageDraw
import numpy as np
from tqdm import tqdm
from IPython.display import display

np.random.seed(724)

dir_main = "/content/drive/MyDrive/INDOOR"
filenames_image = glob(f"{dir_main}/train/images/f2b31981e52820b1.jpg")
# Each label file mirrors its image path: images/ -> labels/, .jpg -> .txt
filenames_label = [filename.replace('images', 'labels').replace('jpg', 'txt') for filename in filenames_image]

classes = ["door", "openedDoor", "cabinetDoor", "refrigeratorDoor", "window", "chair", "table", "cabinet", "sofa/couch", "pole"]

# One random RGBA color per class
color = []
for _ in range(len(classes)):
    c = [int(v) for v in np.random.choice(range(256), size=3)] + [255]
    color.append(tuple(c))

def draw_bbox(draw, bbox, label, color=(0, 255, 0, 255), conf=None, size=15):
    def set_alpha(color, value):
        background = list(color)
        background[3] = value
        return tuple(background)

    # Box outline with a translucent fill
    background = set_alpha(color, 50)
    draw.rectangle(bbox, outline=color, fill=background, width=3)

    # Label text on a more opaque background, sized roughly to the text length
    background = set_alpha(color, 150)
    text = f"{label}" + ("" if conf is None else f":{conf:0.4}")
    text_bbox = bbox[0], bbox[1], bbox[0]+len(text)*10, bbox[1]+25
    draw.rectangle(text_bbox, outline=color, fill=background, width=3)
    draw.text((bbox[0]+5, bbox[1]+5), text, (0, 0, 0))

cnt = 5  # show at most 5 images
for filename_image, filename_label in tqdm(zip(filenames_image, filenames_label)):
    img = Image.open(filename_image)
    img = img.resize((640, 640))
    width, height = img.size
    draw = ImageDraw.Draw(img, 'RGBA')

    with open(filename_label, 'r') as f:
        labels = f.readlines()
    labels = list(map(lambda s: s.strip().split(), labels))

    for label in labels:
        cls = int(label[0])  # class ids are 0-based and index `classes` directly
        x, y, w, h = map(float, label[1:])
        # Normalized center/size -> pixel corner coordinates
        x1, x2 = width * (x-w/2), width * (x+w/2)
        y1, y2 = height * (y-h/2), height * (y+h/2)
        x1, y1, x2, y2 = map(int, [x1, y1, x2, y2])
        draw_bbox(draw, bbox=(x1, y1, x2, y2), label=classes[cls], color=color[cls], size=15)

    display(img)  # Colab environment
    #img.show()

    cnt -= 1
    if cnt == 0:
        break

- Each label text file stores the coordinates of the objects in the image (class id, then normalized center x, center y, width, height)

- The label .txt files are used to annotate each image
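
The coordinate conversion used when drawing the labels can be isolated into a small function. This is a sketch only: the name `yolo_to_pixel_box` is hypothetical, and it assumes each label line holds a class id followed by a normalized center x, center y, width and height, as in the loop above.

```python
def yolo_to_pixel_box(line, width, height):
    """Convert one YOLO label line to (class_id, x1, y1, x2, y2) in pixels."""
    fields = line.split()
    class_id = int(fields[0])
    cx, cy, w, h = map(float, fields[1:5])
    # The box center and size are fractions of the image size,
    # so the corners are center -/+ half the size, scaled to pixels.
    x1, x2 = width * (cx - w / 2), width * (cx + w / 2)
    y1, y2 = height * (cy - h / 2), height * (cy + h / 2)
    return class_id, int(x1), int(y1), int(x2), int(y2)

print(yolo_to_pixel_box("5 0.5 0.5 0.25 0.5", 640, 640))
# -> (5, 240, 160, 400, 480)
```

Here class 5 (chair) is centered in a 640x640 image and covers a quarter of its width and half of its height.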

* yaml file

: the yaml file defines how the dataset is organized

- It specifies the paths to the train/val/test datasets

- nc: number of classes

- names: the name of each class

* Creating the yaml file

config_txt = '''
path: /content/drive/MyDrive/INDOOR # dataset root dir
train: train/images # train images (relative to 'path')
val: valid/images # val images (relative to 'path')
test: test/images # test images (optional)

# Classes
names:
  0: door
  1: openedDoor
  2: cabinetDoor
  3: refrigeratorDoor
  4: window
  5: chair
  6: table
  7: cabinet
  8: sofa/couch
  9: pole
'''

with open("/content/drive/MyDrive/INDOOR/indoor.yaml", 'w') as f:
    f.write(config_txt)

=> Write the contents into config_txt, then create the .yaml file with f.write
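
Since the same class names are also kept in the Python `classes` list, the config text could be generated from that list so the two cannot drift apart. The following is a minimal sketch under that assumption; `build_dataset_yaml` is a hypothetical helper, not an Ultralytics function, and it only produces text in the same layout as the config above.

```python
def build_dataset_yaml(root, class_names):
    """Return dataset config text with one 'id: name' entry per class."""
    lines = [
        f"path: {root}",
        "train: train/images",
        "val: valid/images",
        "test: test/images",
        "names:",
    ]
    # Entries under 'names:' must be indented to stay inside the mapping.
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"

print(build_dataset_yaml("/content/drive/MyDrive/INDOOR", ["door", "openedDoor"]))
```

The returned string can be written to `indoor.yaml` with the same `open(...)` / `f.write(...)` call used above.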

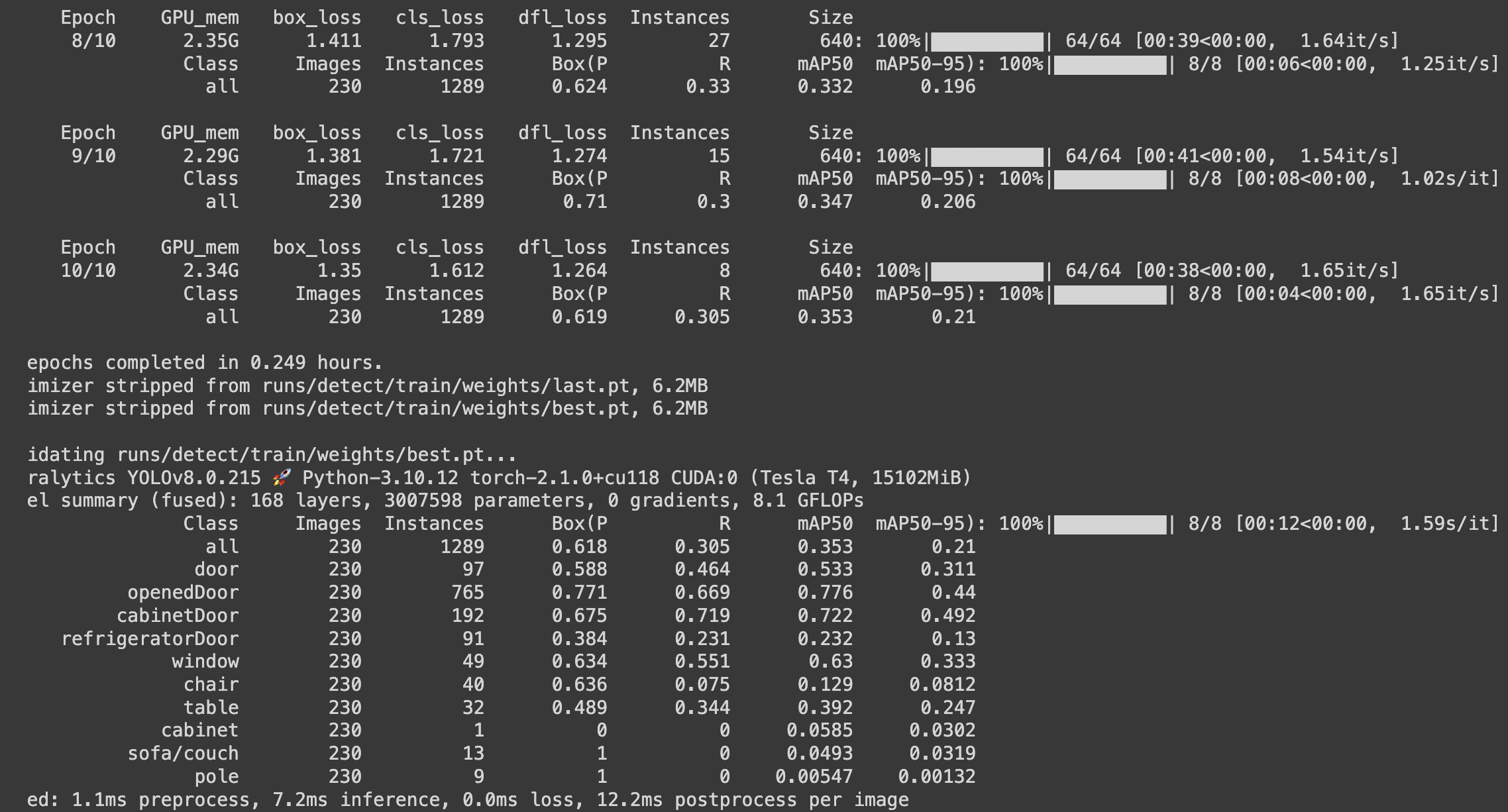

* Loading / training the model

- 10 epochs

from ultralytics import YOLO

# Create a new YOLO model from scratch
model = YOLO('yolov8n.yaml')

# Load a pretrained YOLO model (recommended for training); this replaces the model created above
model = YOLO('yolov8n.pt')

# Train the model using the 'indoor.yaml' dataset for 10 epochs
results = model.train(data='/content/drive/MyDrive/INDOOR/indoor.yaml', epochs=10)

# Evaluate the model's performance on the validation set
results = model.val()

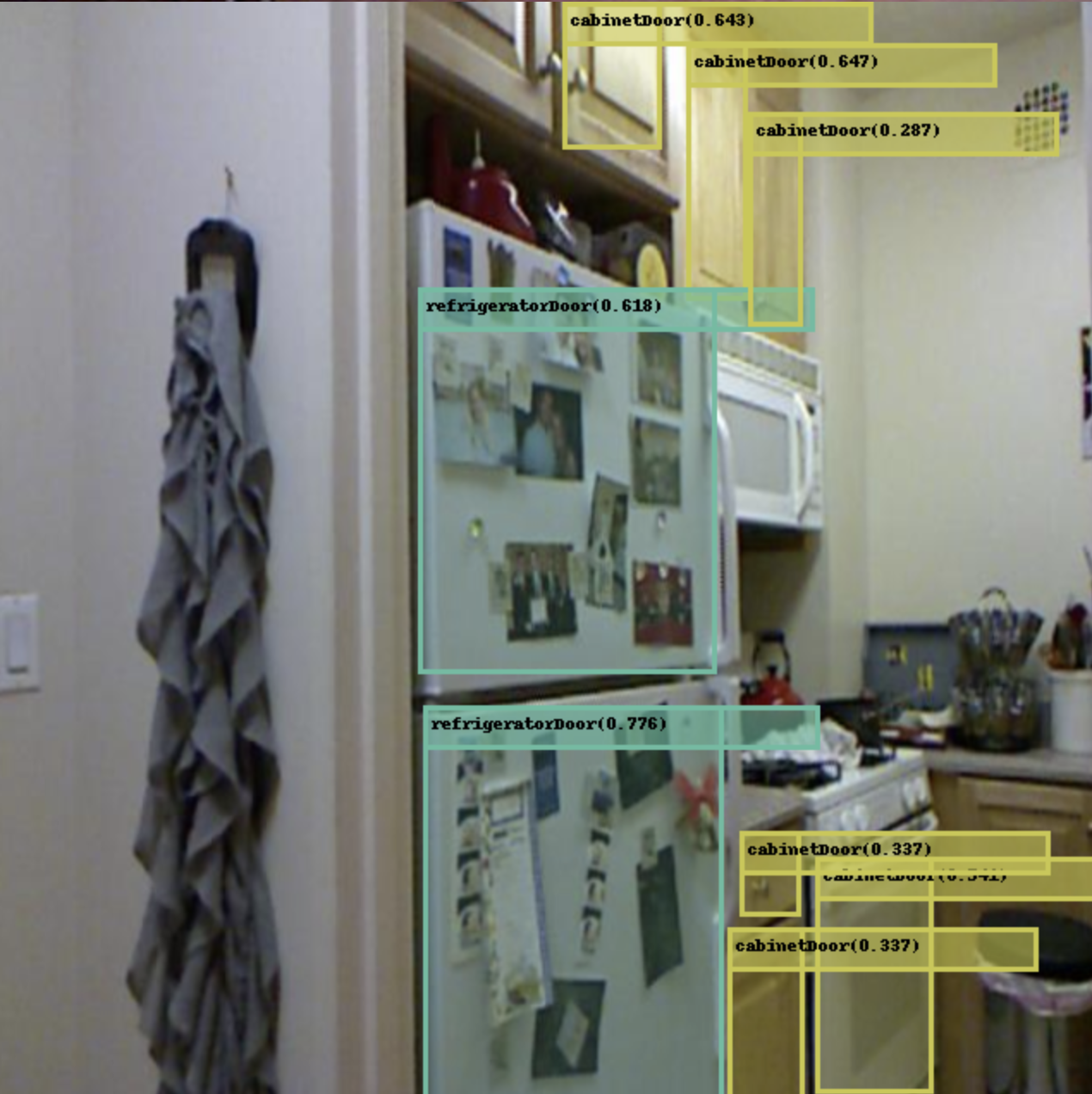
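
Training writes its weights under a run directory, and with default settings Ultralytics appears to number repeated runs (`train`, `train2`, and so on), so a hardcoded `train/weights/best.pt` path may point at an older run. A small sketch for picking the most recently written `best.pt` follows; `latest_best_weights` is a hypothetical helper and the `runs/detect` location is an assumption based on the path used in this post.

```python
from pathlib import Path

def latest_best_weights(runs_dir="runs/detect"):
    """Return the most recently modified */weights/best.pt under runs_dir, or None."""
    candidates = list(Path(runs_dir).glob("*/weights/best.pt"))
    if not candidates:
        return None
    # Modification time is used as a stand-in for "latest run".
    return max(candidates, key=lambda p: p.stat().st_mtime)

# Example call (Colab path assumed):
# print(latest_best_weights("/content/runs/detect"))
```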

* Testing the model

from ultralytics import YOLO
from PIL import Image, ImageDraw, ImageFont
from glob import glob
import numpy as np
from IPython.display import display

#np.random.seed(724)

# Load a model
model = YOLO('/content/runs/detect/train/weights/best.pt')

classes = ["door", "openedDoor", "cabinetDoor", "refrigeratorDoor", "window", "chair", "table", "cabinet", "sofa/couch", "pole"]

# Load images
filelist = glob("/content/drive/MyDrive/INDOOR/test/images/*.png")
filelist = np.random.choice(filelist, size=20)
imgs = [Image.open(filename) for filename in filelist]

# Perform object detection on the images using the model
results = model(imgs)

def draw_bbox(draw, bbox, label, color=(0, 255, 0, 255), conf=None, size=12):
    #font = ImageFont.truetype("/usr/share/fonts/truetype/dejavu/DejaVuMathTeXGyre.ttf", size)
    def set_alpha(color, value):
        background = list(color)
        background[3] = value
        return tuple(background)

    # Box outline with a translucent fill
    background = set_alpha(color, 50)
    draw.rectangle(bbox, outline=color, fill=background, width=3)

    # Label text (with confidence, if given) on a more opaque background
    background = set_alpha(color, 150)
    text = f"{label}" + ("" if conf is None else f"({conf:0.3})")
    text_bbox = bbox[0], bbox[1], bbox[0]+len(text)*10, bbox[1]+25
    draw.rectangle(text_bbox, outline=color, fill=background, width=3)
    draw.text((bbox[0]+5, bbox[1]+5), text, (0, 0, 0))

# One random RGBA color per class
color = []
n_classes = len(classes)
for _ in range(n_classes):
    c = [int(v) for v in np.random.choice(range(256), size=3)] + [255]
    color.append(tuple(c))

for img, result in zip(imgs, results):
    img = img.resize((640, 640))
    width, height = img.size
    draw = ImageDraw.Draw(img, 'RGBA')

    result = result.cpu()
    xyxys = result.boxes.xyxyn.numpy()  # boxes in xyxy format, normalized, (N, 4)
    confs = result.boxes.conf.numpy()  # confidence scores, (N,)
    clss = map(int, result.boxes.cls.numpy())  # class ids, (N,)

    for xyxy, conf, cls in zip(xyxys, confs, clss):
        # Scale normalized coordinates to the resized image
        xyxy = [float(xyxy[0]*width), float(xyxy[1]*height), float(xyxy[2]*width), float(xyxy[3]*height)]
        # Class ids are 0-based, so they index `classes` directly
        draw_bbox(draw, bbox=xyxy, label=classes[cls], color=color[cls], conf=float(conf), size=15)

    # Instead of img.show(), use the IPython.display module to render the image.
    display(img)
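
Besides drawing the boxes, it can help to summarize what was detected in each image. The sketch below counts detections per class above a confidence threshold; `count_detections` and the 0.5 default are assumptions for illustration, and the function works on plain Python lists so it does not depend on the model being loaded.

```python
from collections import Counter

def count_detections(class_ids, confidences, class_names, min_conf=0.5):
    """Count detections per class name, keeping only scores >= min_conf."""
    counts = Counter()
    for class_id, conf in zip(class_ids, confidences):
        if conf >= min_conf:
            counts[class_names[int(class_id)]] += 1
    return dict(counts)

names = ["door", "openedDoor", "cabinetDoor", "refrigeratorDoor", "window",
         "chair", "table", "cabinet", "sofa/couch", "pole"]
print(count_detections([0, 5, 5, 9], [0.9, 0.4, 0.8, 0.55], names))
# -> {'door': 1, 'chair': 1, 'pole': 1}
```

With the results from the loop above, the inputs could be obtained as `result.boxes.cls.tolist()` and `result.boxes.conf.tolist()`.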
